- The Cerberus Project

Chapter 2:

Fall 2014 Semester and Weekly progress

The fall semester, now drawn to a close, was a period of intense research and of implementing the systems administration that a project of this depth and magnitude requires. All of the preliminary research is now complete, as is the vast majority of the cluster building, although expansion of the cluster is ongoing. Most of the software and hardware, and the methods to bind them together, have been acquired and compiled, and the project is still on schedule. Project management has been integral to keeping the project on track and is also discussed here, to keep coverage of the project's non-proprietary information comprehensive.

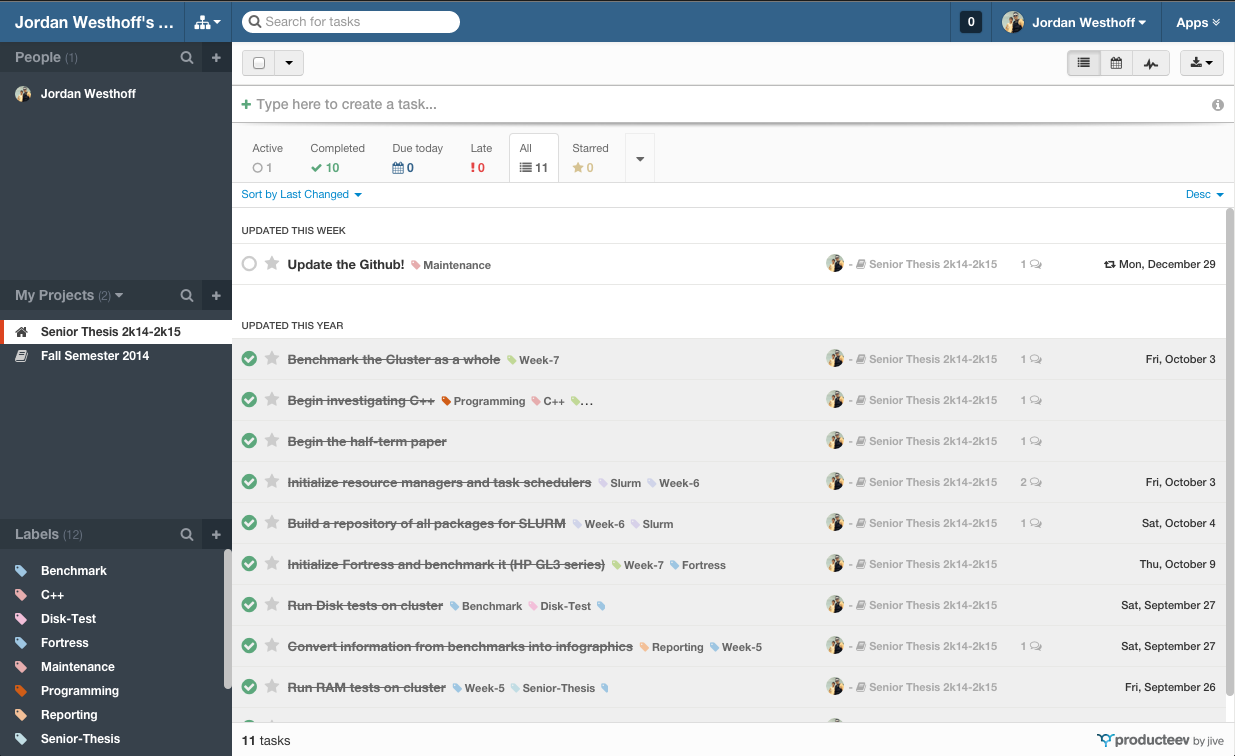

Project Management - Producteev

Managing the project is a significant workload in its own right. Its most significant facet is gathering all of the tasks to be completed and managing the time constraints attached to them. After looking through a wide variety of products and services, a free service known as Producteev was adopted, with great success. Producteev runs on desktop operating systems, Apple iOS and Android, so it is always accessible regardless of where work is being done. Producteev allows entire projects to be defined within the dashboard, along with sub-projects and tasks within those projects.

All of these can be assigned to different people or, in this case, all assigned to myself. Due dates, notifications, intelligent tagging and search are provided as well, which makes managing tasks, meetings and upcoming deadlines simple. Producteev is intended to scale from single users up to enterprise teams. It was also chosen because it is free for educational use: all of its features are free under the terms of the project and the scale of its implementation (fewer than ten users and fewer than ten open projects). Collaboration can therefore be opened up to multiple parties and advisors, should it be necessary.

Since Gantt charts and expected productivity timelines had already been generated, entering them into Producteev was simple. In addition, Producteev generated daily update emails and weekly recap reports to help monitor progress achieved and tasks remaining on a daily and weekly basis. Producteev was also used to manage concurrent classes and outstanding assignments, which made it an excellent, holistic tool for the project.

Full Timelines - Fall Semester

The following is a complete week-by-week synopsis of all of the work completed on the thesis during the fall term. Unfamiliar terms are discussed later in their own sections. All of the information is drawn from the internal documentation kept for the thesis project.

Week 1

The first week of the semester consisted of several small tasks. These included appraising the progress made over the summer and determining its impact on the project. Most of the week was spent moving equipment from my previous labs into new labs and reconfiguring the current networking. The rest was spent setting goals for the semester and loose ideas of acceptable time frames for deadlines.

Week 2

Week 2 was similar to Week 1: little physical progress was made, but a lot of required groundwork and pre-production was carried out. As part of this, project management software was chosen and its implementation was started. Producteev was chosen as the primary software package and it has served well over the course of the semester. Producteev allows desktop and mobile access, with notifications and timetables. It can also share project requirements with other registered members, all of which stays private and unique to each project as set by the user. Producteev also supports Gantt charts and other organizational tools. Senior Forum discussed the presentations required and I chose the first slot, so preparation for that started as well.

Week 3

Week 3 was spent mostly preparing the presentation to give to the MPS senior class. In addition, several new systems were added to the stack and had to be configured. In preparation for these nodes, work began on a basic set of benchmarking software.

Week 4

This week was spent finishing the presentation as well as polishing the initial benchmarking scripts. These tested CPU speed and disk speed and then copied the results to a server to be logged. They weren't elegant, but they gave an initial idea of performance; they will be replaced later by a more elegant solution. This week I also set up a GitHub repository for the project, which now houses all of the code and commits and, in future weeks, a wiki page.
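The kind of measurement described above can be sketched in a few lines. This is a minimal illustration, not the project's actual scripts: the function names, workload size, file size and CSV layout are all assumptions made for the example, and the step that copies the result to a logging server is left out.

```python
import os
import socket
import tempfile
import time


def time_cpu(iterations=200_000):
    """Return the seconds taken by a fixed integer workload (a rough CPU proxy)."""
    start = time.perf_counter()
    total = 0
    for i in range(iterations):
        total += i * i
    return time.perf_counter() - start


def time_disk_write(size_mb=8, directory=None):
    """Return the MB/s achieved while writing size_mb of zeros to a temp file."""
    chunk = b"\0" * (1024 * 1024)
    with tempfile.NamedTemporaryFile(dir=directory) as handle:
        start = time.perf_counter()
        for _ in range(size_mb):
            handle.write(chunk)
        handle.flush()
        os.fsync(handle.fileno())  # push the data to disk before stopping the clock
        elapsed = time.perf_counter() - start
    return size_mb / max(elapsed, 1e-9)  # guard against a zero-length interval


def format_result(hostname, cpu_seconds, disk_mb_per_s):
    """Build one CSV line (hypothetical layout) for appending to a central log."""
    return f"{hostname},{cpu_seconds:.4f},{disk_mb_per_s:.1f}"


if __name__ == "__main__":
    print(format_result(socket.gethostname(), time_cpu(), time_disk_write()))
```

Each node would run the script and ship its one-line result to the logging server; numbers from a toy workload like this are only comparable between nodes running the same script.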

Week 5

This week consisted entirely of memory (RAM) testing, disk testing and some basic infographic generation. In addition, now that a repo existed for the project code, a second repo was created to house SLURM and all of its dependencies. The second repo was not completed this week.

Week 6

This week was a comprehensive look at resource managers. SLURM was re-examined, and Maui+Torque and other solutions such as GEARS, OpenPBS and ROCKS Cluster were examined as well. SLURM won out and remains the choice for cluster resource management. In addition, a new server, Fortress (HP GL3 series), was added. The server is underpowered compared to the rest of the cluster but will still be useful for other aspects of the project. The primary NAS server was also disk tested and its results logged.

Week 7

Given upcoming midterms, this was an easier week that revolved mostly around investigating C++, learning the basics of compiling code and benchmarking the cluster as a whole. Passwordless (key-based) SSH was also configured so that each node can communicate with every other node in the cluster without constant password authentication.
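Passwordless SSH is usually set up by generating a key pair with an empty passphrase and installing the public key on each node. The sketch below only builds the standard `ssh-keygen` and `ssh-copy-id` commands and prints them; the key path, user name and node names are hypothetical, and the exact procedure used on the cluster is not documented here.

```python
import shlex


def keygen_command(key_path):
    """Command that creates an RSA key pair with an empty passphrase."""
    return ["ssh-keygen", "-t", "rsa", "-N", "", "-f", key_path]


def copy_id_commands(key_path, user, nodes):
    """One ssh-copy-id command per node, installing the public key there."""
    return [
        ["ssh-copy-id", "-i", f"{key_path}.pub", f"{user}@{node}"]
        for node in nodes
    ]


if __name__ == "__main__":
    key = "/home/user/.ssh/id_rsa"   # hypothetical key path
    nodes = ["node01", "node02"]     # hypothetical node names
    print(shlex.join(keygen_command(key)))
    for command in copy_id_commands(key, "user", nodes):
        print(shlex.join(command))
```

For every node to reach every other node, the same steps would have to be repeated from each node, or the key pair shared between them.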

Week 8

The comprehensive thesis paper was started. It was scoped to cover the architecture, the available benchmarking data and the design of the current systems. The GitHub wiki page was also created, and it took some time to populate with information (timelines, front page, etc.).

Week 9

Work on the paper continued. In addition, common C++ image processing tools are now being tested, which includes compiling and running OpenCV, dcraw and CImg. Lots of testing and trying things, not a lot to write about.

Week 10

This week it was discovered that the distribution of CentOS being used on the head node was not 64-bit (x86_64) but rather the deprecated 32-bit i686 architecture. The week was spent upgrading the head node and testing the full install scripts to ensure easier provisioning. In addition, PTS (Phoronix Test Suite) was implemented to replace my older, less elegant home-brewed solutions. I also spent a great deal of time installing the PTS Phoromatic server and the web dashboard.
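A mismatch like this can be caught early with a small check run on each node before provisioning. The sketch below is an assumption about how such a check could look, not part of the project's install scripts; it classifies the machine string that `uname -m` would report.

```python
import platform


def is_64bit_machine(machine):
    """Return True if a `uname -m` style string names 64-bit x86."""
    return machine.lower() in {"x86_64", "amd64"}


if __name__ == "__main__":
    machine = platform.machine()
    label = "64-bit" if is_64bit_machine(machine) else "not 64-bit x86"
    print(f"{platform.node()}: {machine} ({label})")
```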

Week 11

More C++ work was done this week. In addition, PTS has an integrated dcraw test profile, so I began using that. That profile uses a really basic TIFF -> PNG operation, though, which isn't what I'll be using dcraw for, so I also began working on a custom PTS benchmark that uses dcraw. Thanks to the Research Computing office, I was able to begin adding satellite nodes for testing and comparing enterprise vs desktop hardware.

Week 12

Five new intruder nodes were acquired thanks to the good will of the computer engineering department. These units were registered and brought onto the network after much stress (thanks RIT RESNET). CentOS was initialized, updated and provisioned on each, the nodes were benchmarked, and the Phoromatic server was set up to run with each node. The image processing setup and custom dcraw testing are built and just need to be implemented once SLURM is stable. One of the largest compute nodes, Crawler, was also running the old i686 architecture and had to be converted to x86_64 for better RAM and CPU utilization. In addition, all units were benchmarked to compare 32-bit and 64-bit performance.

Week 13

Week 13 brought the implementation of Ansible, an open-source, large-scale provisioning tool based on SSH. After a full week of testing, Ansible was deemed worthwhile and is now replacing all of the original initialization scripts, because it can run an operation on the entire cluster at once (a yum update, for instance) and report back with detailed information. Ansible saves massive amounts of time in a variety of tasks and is now integral to the administration of the cluster.
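The cluster-wide yum update mentioned above corresponds to a single Ansible ad hoc command. The sketch below builds that command as it would be written for current Ansible releases, using the `yum` module with `name=* state=latest`; the inventory path is hypothetical, and the command is printed rather than executed.

```python
import shlex


def ansible_yum_update(inventory, pattern="all"):
    """Ad hoc Ansible command that updates every package on matching hosts."""
    return [
        "ansible", pattern,
        "-i", inventory,               # inventory file listing the cluster nodes
        "-m", "yum",                   # use the yum package module
        "-a", "name=* state=latest",   # upgrade all installed packages
        "--become",                    # escalate privileges on each node
    ]


if __name__ == "__main__":
    # "hosts.ini" is a hypothetical inventory file name.
    print(shlex.join(ansible_yum_update("hosts.ini")))
```

Passing the resulting list to `subprocess.run` would execute it from the head node, with Ansible reporting success or failure per host.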

Week 14

This week Zabbix was installed to provide constant monitoring of every node in the cluster. Zabbix works on a server-agent model: an agent on each node reports to a central server. After a couple of days the server was configured, and all of the nodes and satellite nodes were configured as well. This provides around-the-clock monitoring, with alerts and updates if cluster nodes go down or overheat (among 80 other markers and statistics that can be recorded or monitored).
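The idea behind a "node down" alert can be illustrated independently of Zabbix with a plain TCP reachability probe. This is only a sketch of the concept, not how Zabbix itself performs its checks; it assumes the agents listen on the default Zabbix agent port, 10050, and the host names are hypothetical.

```python
import socket

ZABBIX_AGENT_PORT = 10050  # default listen port of the Zabbix agent


def agent_reachable(host, port=ZABBIX_AGENT_PORT, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def down_nodes(hosts, port=ZABBIX_AGENT_PORT, timeout=2.0):
    """Return the hosts whose agent port could not be reached."""
    return [host for host in hosts if not agent_reachable(host, port, timeout)]


if __name__ == "__main__":
    cluster = ["node01", "node02"]  # hypothetical node names
    for host in down_nodes(cluster):
        print(f"ALERT: {host} is not answering on port {ZABBIX_AGENT_PORT}")
```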

Week 15

Thanksgiving break and the rest of the time before finals were spent cleaning up code, researching C++, and converting old scripts to Ansible YAML playbooks, which run faster than the scripts they replace.

Week 16

This week was relatively light. I blew a breaker in UC and left the building without power for two hours (cool, but the university wasn't thrilled). The full cluster is a power monster and I need to look into better power management, or more power. Or both.

Week 17

This week was entirely unproductive because of finals. The cluster was prepped and configured to be managed over IPMI during break, so that more work can be done remotely and it can be set to run full bore once intersession starts.